Datapeople’s bias guidance helps you avoid content or language in job descriptions that can deter qualified candidates from applying, which helps you with both recruiting and compliance.

Datapeople highlights potentially troublesome language in job descriptions to help you make protected groups feel welcome to apply to your jobs. In fact, bias guidance helps you make everyone feel welcome to apply, which in turn attracts larger, more qualified, and more diverse candidate pools.

Datapeople currently addresses eight forms of bias: racism, tokenism, ableism, ageism, sexism, nationalism, elitism, and religion bias.

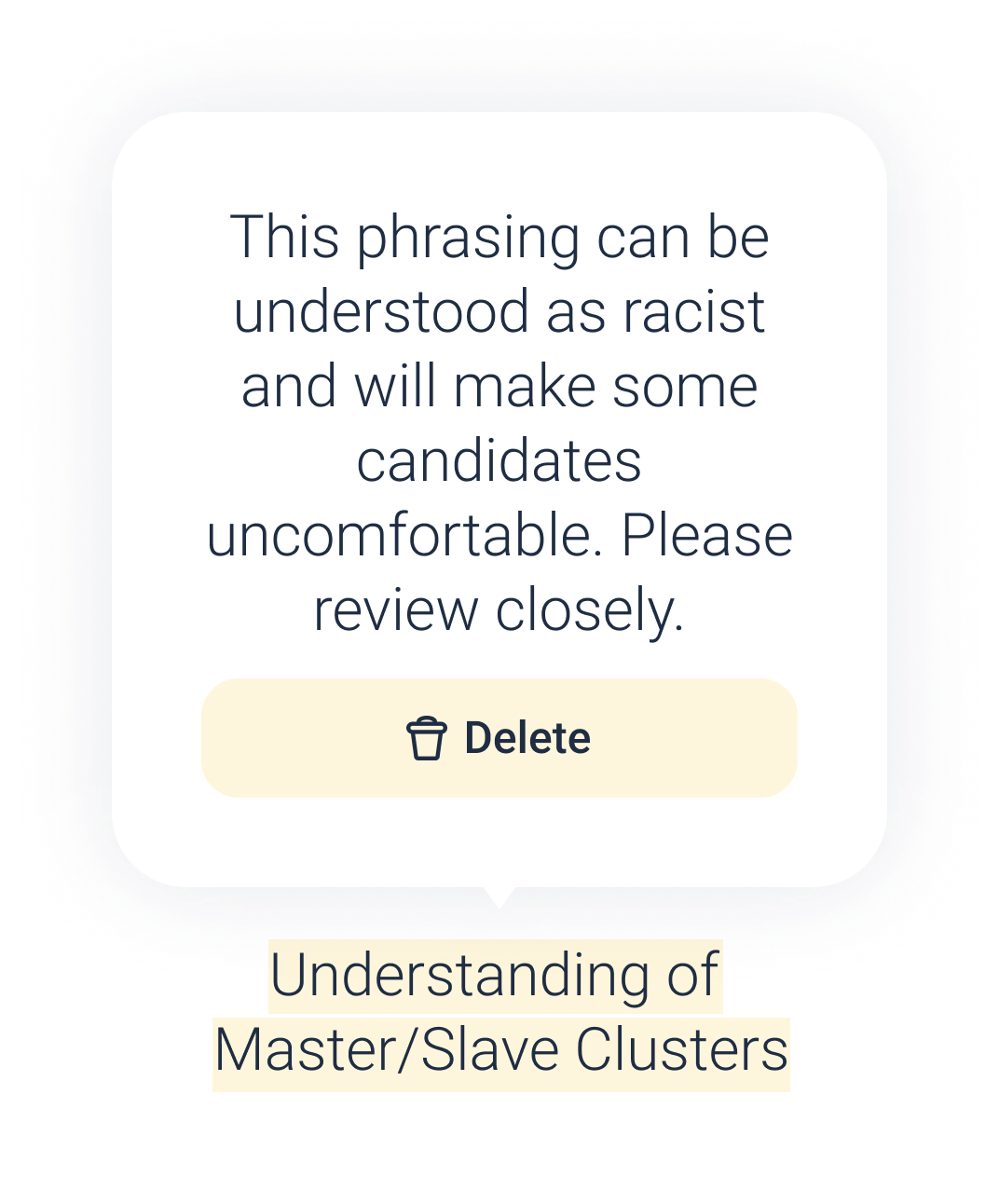

Racism in job descriptions

Despite regulations, racism remains a significant source of discrimination against candidates in the hiring process, and it can sometimes creep into your job descriptions.

The Civil Rights Act of 1964 (CRA 1964) makes it illegal to discriminate against anyone in employment because of their race. However, hiring discrimination based on race is still prevalent. In fact, research suggests that hiring discrimination against African Americans hasn’t improved at all in the last 25 years.

Datapeople’s racism guidance highlights language that can imply a preference for a specific racial identity.

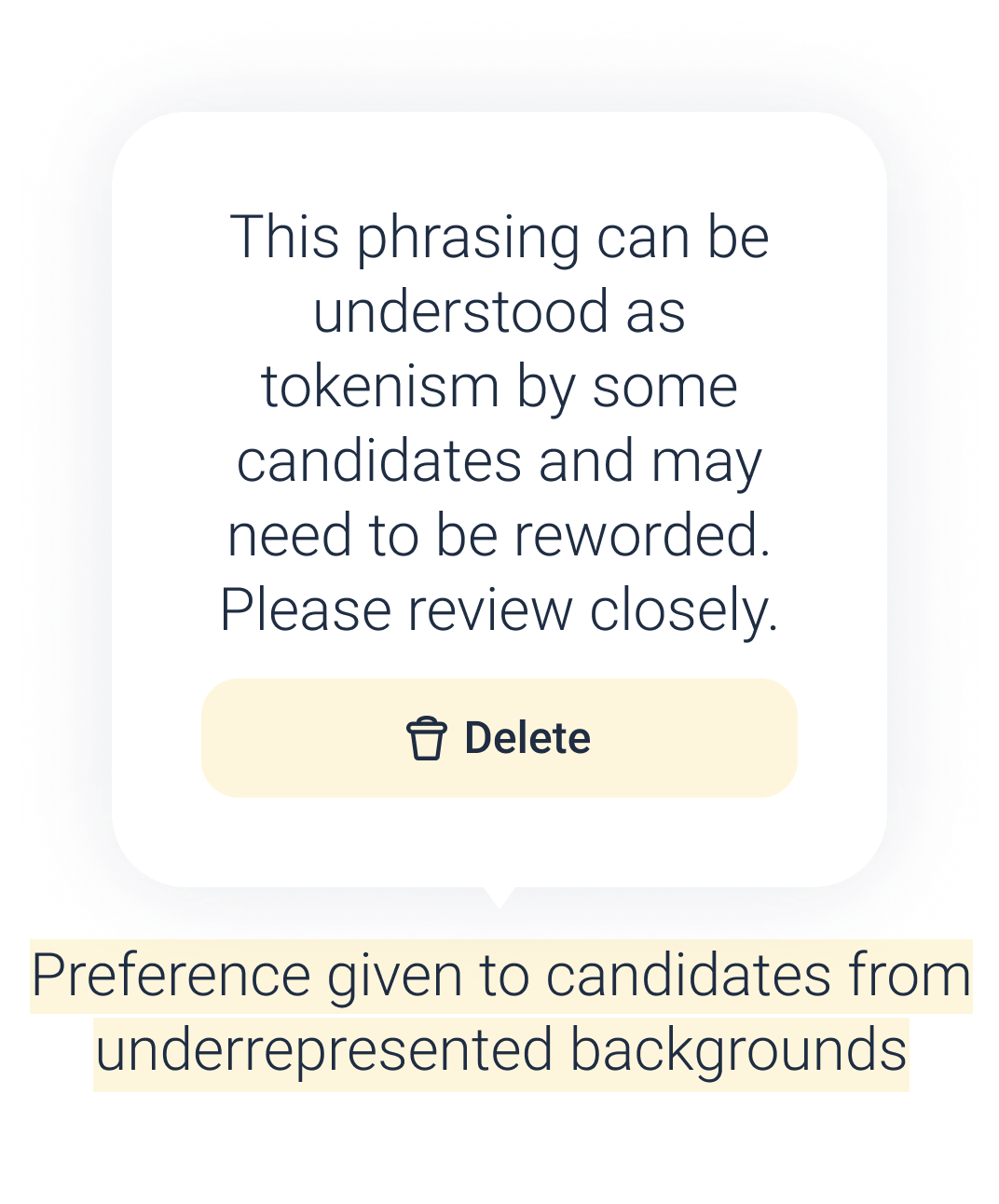

Tokenism in job descriptions

Targeting one group of candidates over another creates inequality, no matter the intent or the makeup of the groups.

Sometimes hiring teams target members of a specific underrepresented group in an attempt to alter the diversity of their candidate pool. But this practice can be discriminatory for another group.

Datapeople’s tokenism guidance highlights language that can imply a preference for certain underrepresented identities.

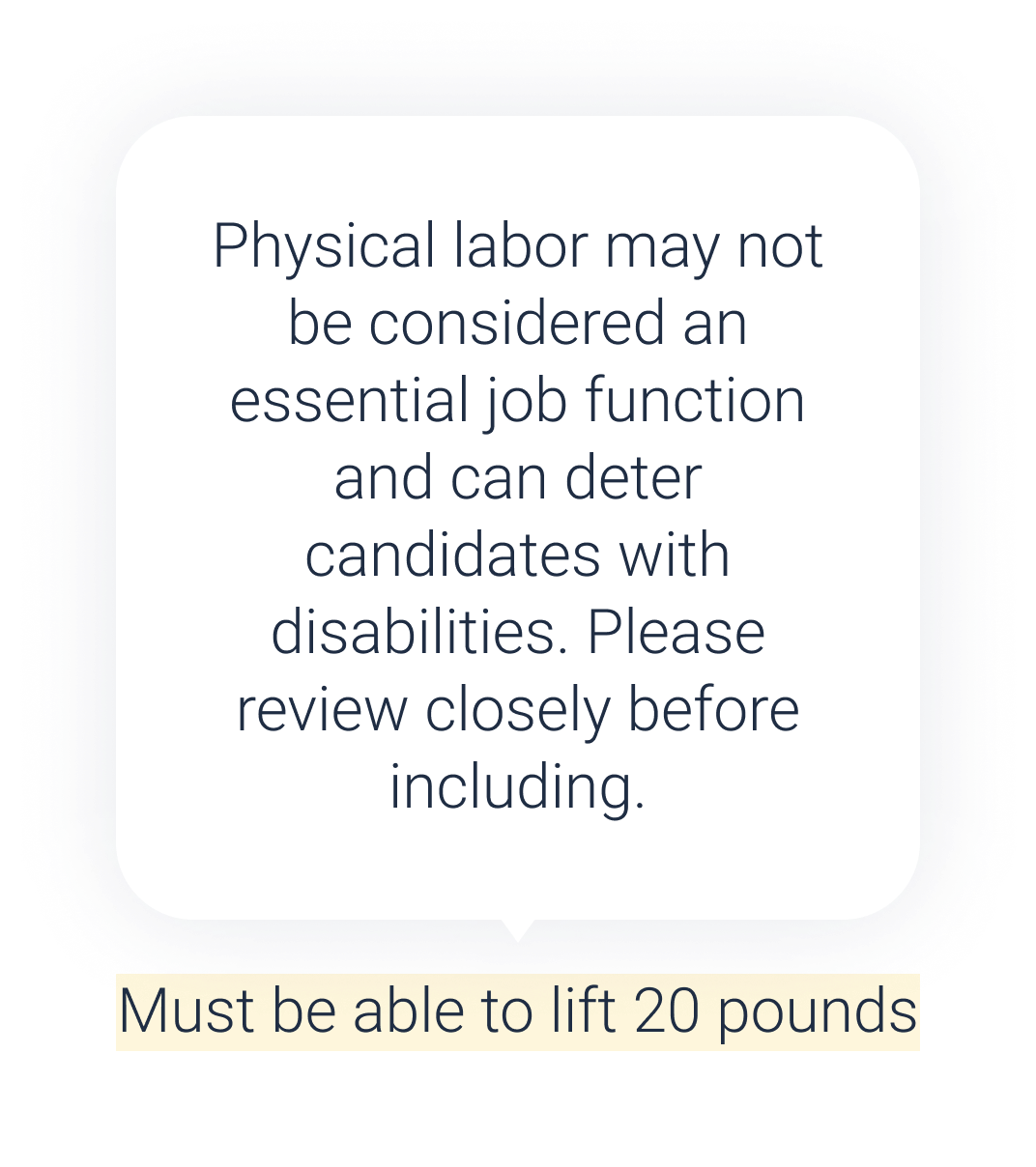

Ableism in job descriptions

Avoiding ableism is a great reason to limit job requirements to just the essentials. Requirements that aren’t essential, or skills that anyone can easily learn, don’t need to be listed. And reasonable accommodations under the Americans with Disabilities Act can go a long way.

Is ‘the ability to lift 20 pounds’ essential to a desk job? If not, it needlessly excludes everyone who can’t lift 20 pounds. Is ‘thrives in a bustling work environment’ a good requirement for people with hearing loss? What about ‘ability to take 10,000 steps a day’ for someone in a wheelchair?

Datapeople’s ableism guidance highlights requirements that may not be essential functions and can deter candidates with disabilities.

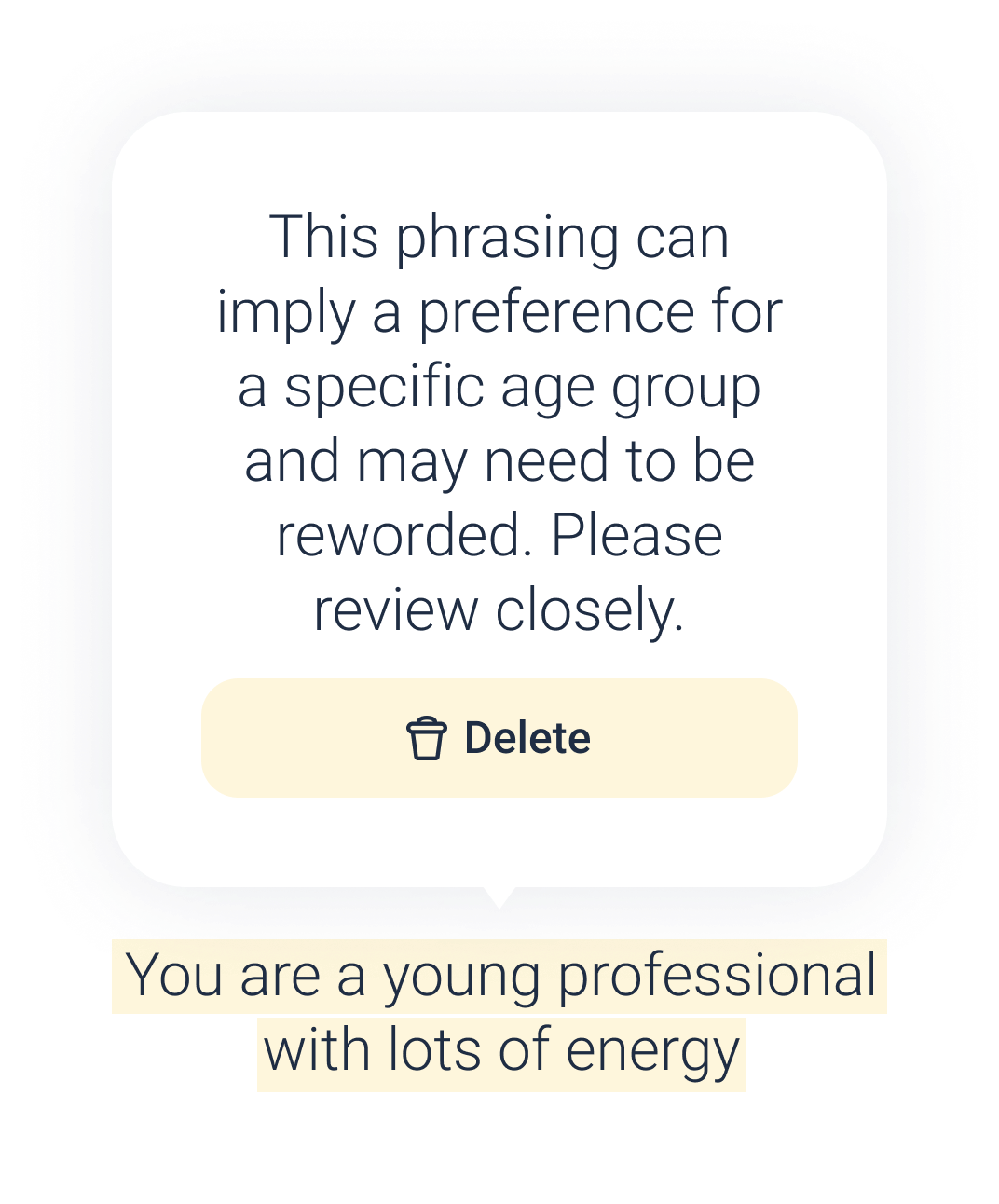

Ageism in job descriptions

Age bias is subtle and can creep into job descriptions without most people noticing. The Age Discrimination in Employment Act currently addresses only bias against older workers (those age 40 and over), but age bias affects younger workers too.

Asking for ‘tech-savvy’ or ‘seasoned’ applicants may not sound exclusionary. But ‘tech-savvy’ can imply younger, while ‘seasoned’ can imply older. It’s easy to encode ageism into your job description without even recognizing it. For example, we tend to imply youth for entry-level roles when the truth is that anyone starting a career (regardless of age) can be entry-level.

Datapeople’s ageism guidance highlights phrasing that can imply a preference for a specific age group.

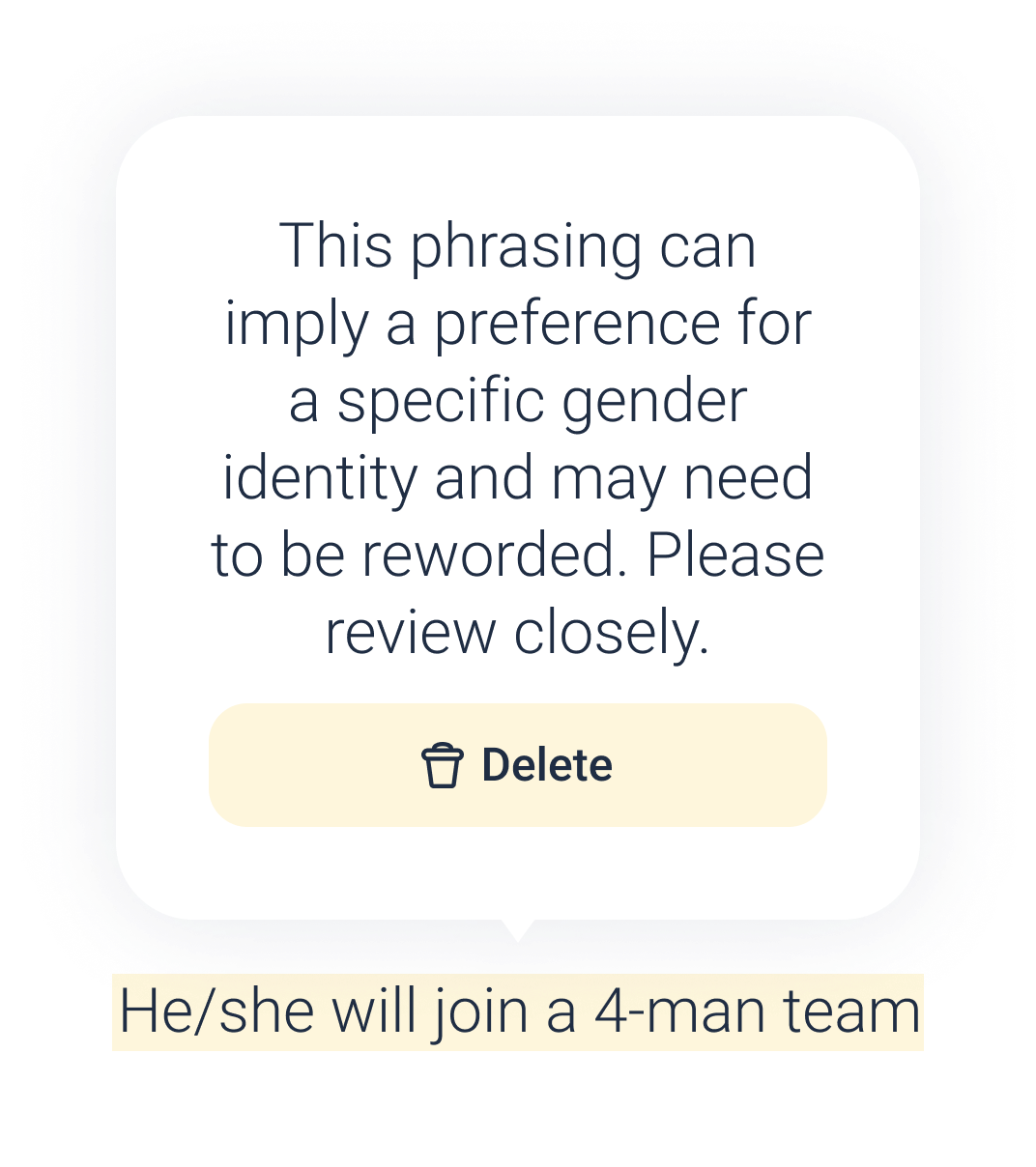

Sexism in job descriptions

Gender bias runs deep and wide in our society. So deep, in fact, that it’s built into a lot of the language we use to describe things. Think mail-man, police-man, jack of all trades.

When you picture a paperboy, do you picture a girl…or a boy? Less obviously, do women view assertiveness as a male or female attribute? Ideally, it would be both, but traits like assertiveness, independence, and strength tend to signal masculinity. Female job seekers may infer from that language that the company really wants a man in the position, even though the Civil Rights Act of 1964 (CRA 1964) forbids that kind of preference.

Datapeople’s sexism guidance highlights phrasing that can imply a preference for a specific gender identity.

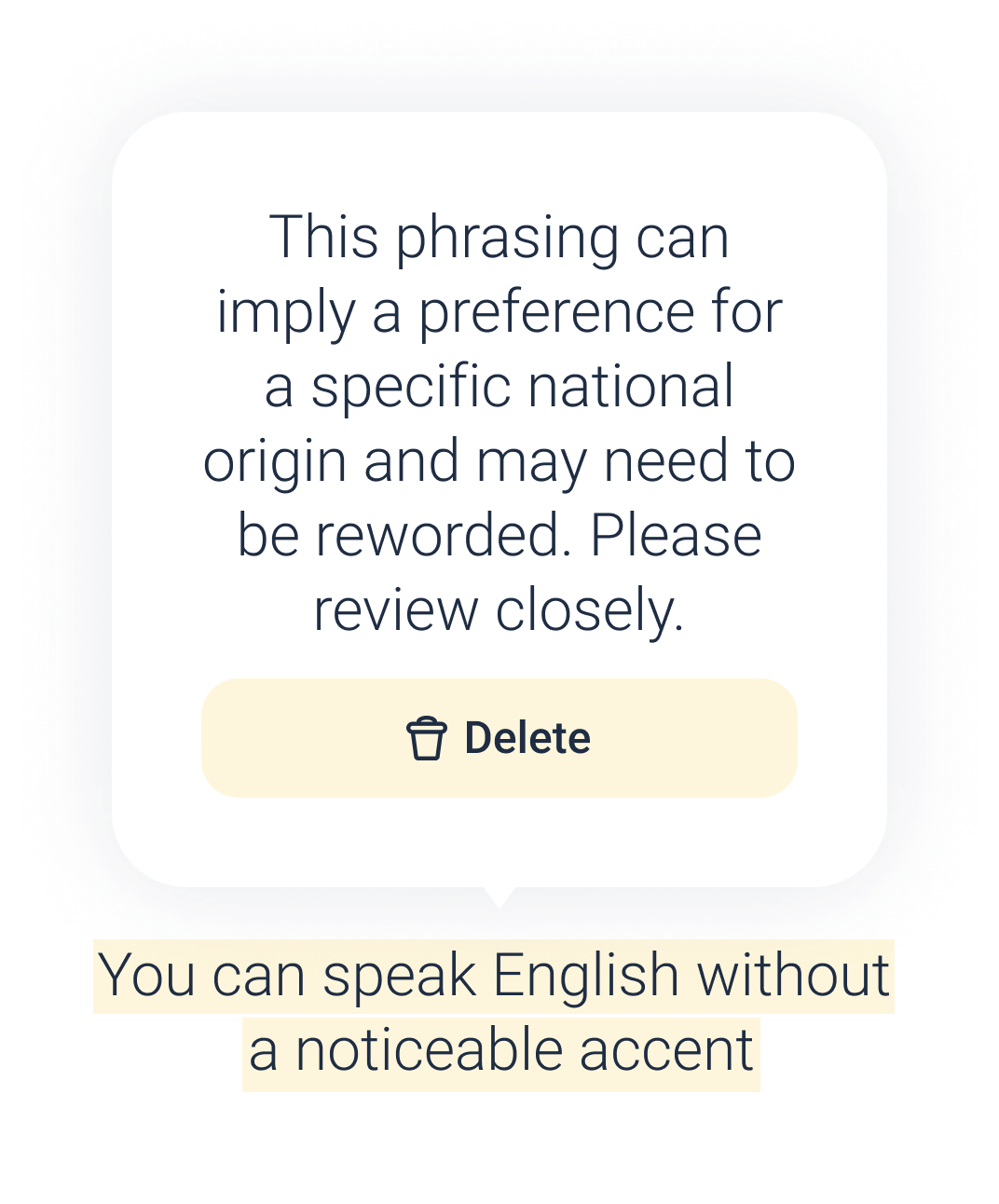

Nationalism in job descriptions

Expats are keenly aware of the potential for bias against them in the workplace. If their looks, accent, clothes, or culture are different, it may not matter that they have the legal right to work in a country.

National origin bias can crop up unintentionally in job descriptions. A requirement for a ‘native speaker’ or even just an ‘excellent communicator’ can spark doubt in a bilingual, bicultural job seeker. Someone reading a job description with either of those requirements may assume the company doesn’t want foreign-born applicants and decide not to apply, even though CRA 1964 expressly forbids that kind of preference.

Datapeople’s nationalism guidance lets you know if some of your messaging implies a preference for a specific national origin.

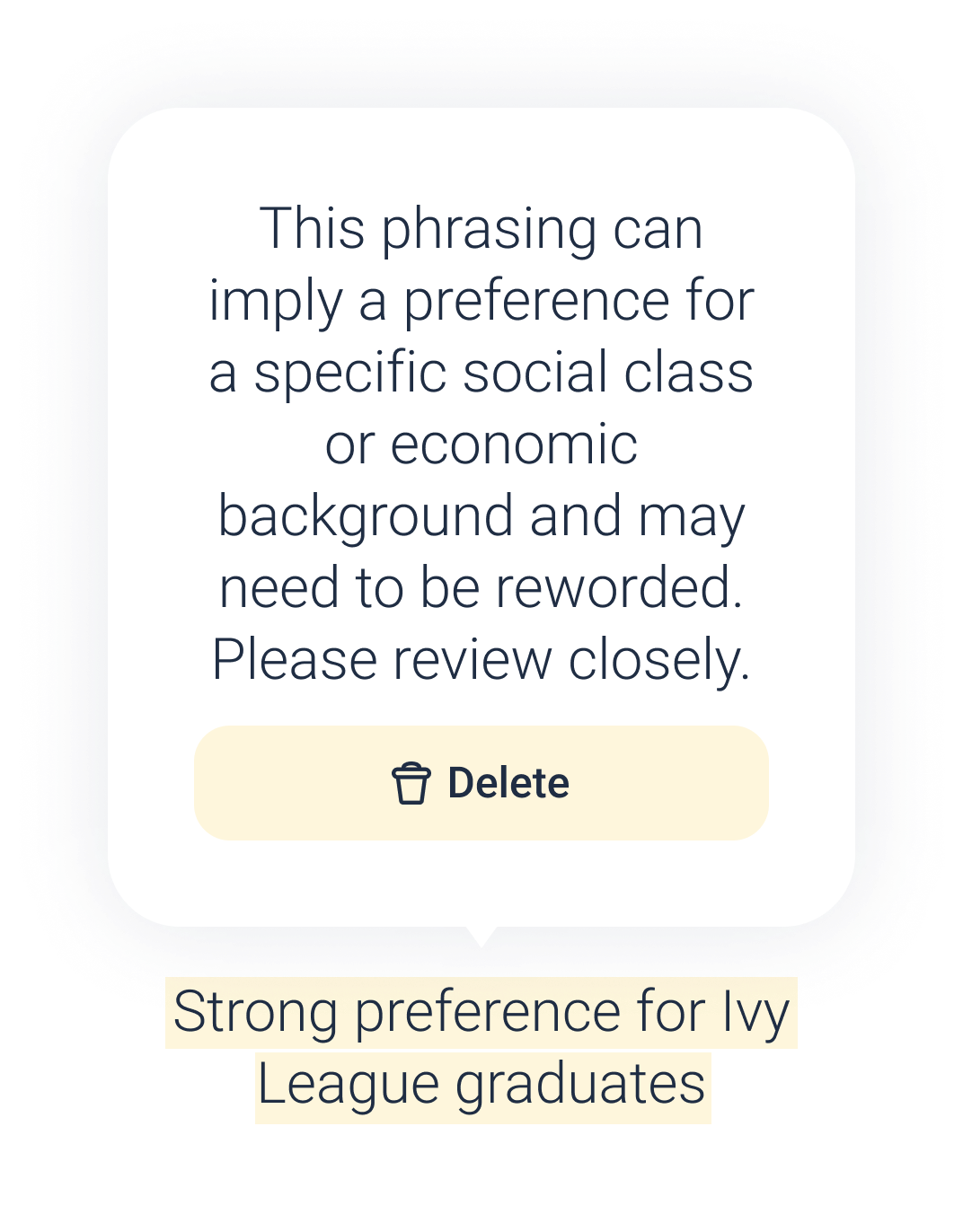

Elitism in job descriptions

This one can be super subtle, but it’s there. Job seekers come from all kinds of backgrounds, and not everyone has the same opportunities. Hiring teams have to be careful not to deter job seekers who have had fewer opportunities in life.

Although socioeconomic bias doesn’t fall under CRA 1964, it’s something your hiring team should avoid. Examples include assuming someone owns a car, asking candidates to complete an unpaid project as part of applying, and requiring an unpaid internship or an Ivy League education.

Datapeople’s elitism guidance flags wording that can imply a preference for a specific social class or economic background.

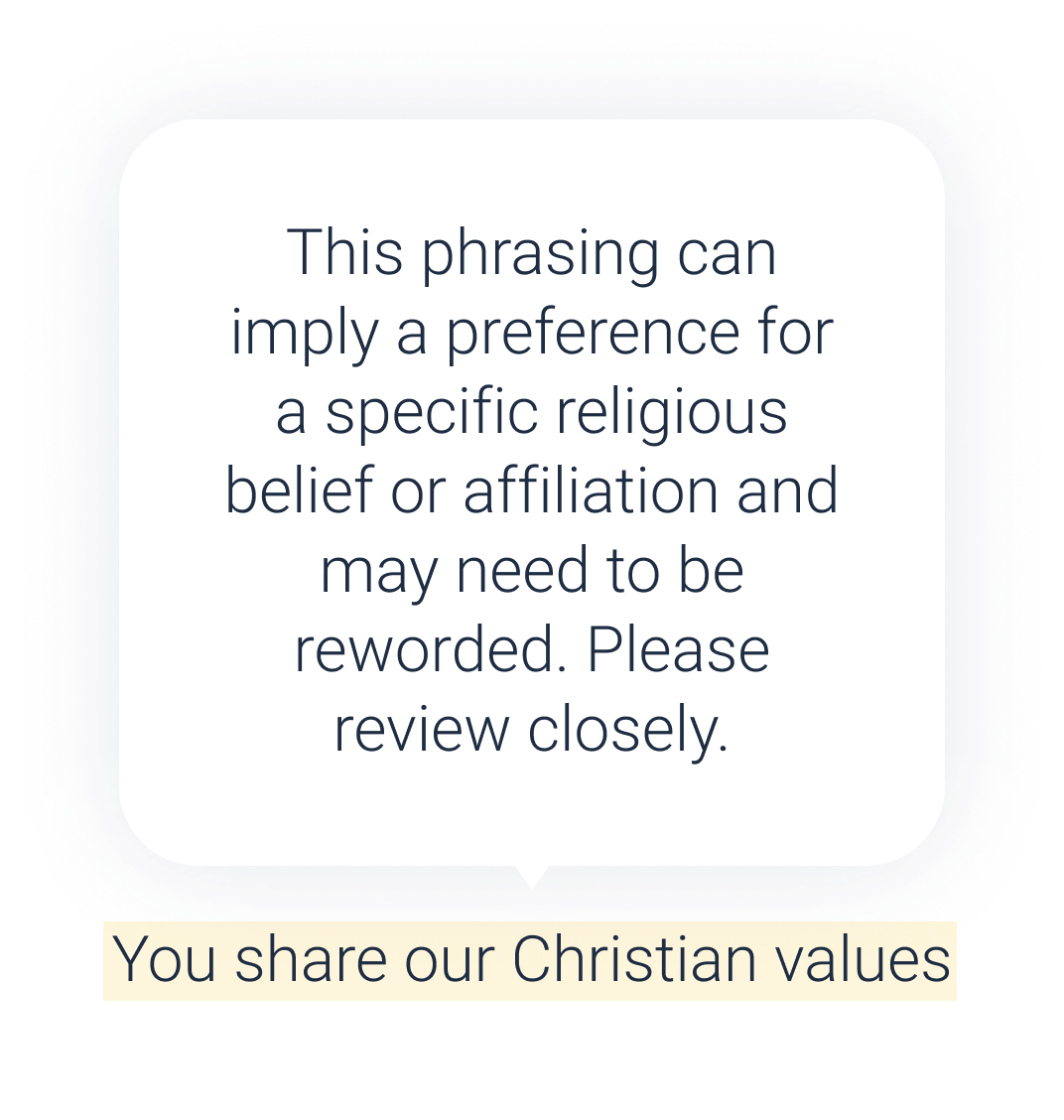

Religion bias in job descriptions

CRA 1964 makes it illegal to discriminate against workers based on religion. It also requires employers to provide reasonable accommodations. Those may apply to clothes, hairstyles, and work schedules. (So, Jewish men can wear yarmulkes, Sikh men can have long hair, and Muslims can take prayer breaks.)

Referring to a particular religion or expressing a sentiment common to a single religion is problematic. By showing a preference for one religion, you may exclude someone from another. By mentioning religion at all, you may deter someone who is agnostic or atheistic.

Datapeople’s religion bias guidance calls out phrasing that may imply a preference for a specific religious belief or affiliation.

Bias guidance for job descriptions

In the end, you want all job seekers to feel like they would belong at your company, no matter their gender, race, nationality, disability status, or anything else. That way, you can attract larger, more diverse, and more qualified candidate pools and improve your diversity, equity, and inclusion efforts. To do that, you have to be able to identify bias in a job description. Datapeople can help with that.