When people think of job descriptions in the context of diversity, equity, and inclusion, they often think first of gendered language, probably because the recruiting industry has been talking about it for a few years now. But gender decoding only addresses sexism, not the other biases that can inadvertently appear in job descriptions.

The Inclusion Meter in Datapeople alerts you when job descriptions skew towards exclusive rather than inclusive. But it didn’t always work that way. In fact, its evolution has mirrored the evolution of our thinking about gendered language and bias, in general.

“Treating different things the same can generate as much inequality as treating the same things differently.”

― Kimberlé Crenshaw, professor of law at UCLA and Columbia Law School, leading authority on civil rights

Removing gendered language is a good start

Gender decoding means avoiding gendered pronouns (‘he’ or ‘she’) and removing gender-coded wording that can deter job seekers. It’s vital because female candidates make up the largest group of historically underrepresented candidates.

But sexism isn’t the only bias. A number of biases can signal to candidates that the company wants someone else. Gender decoding is actually the bare minimum you can do to welcome all candidates.

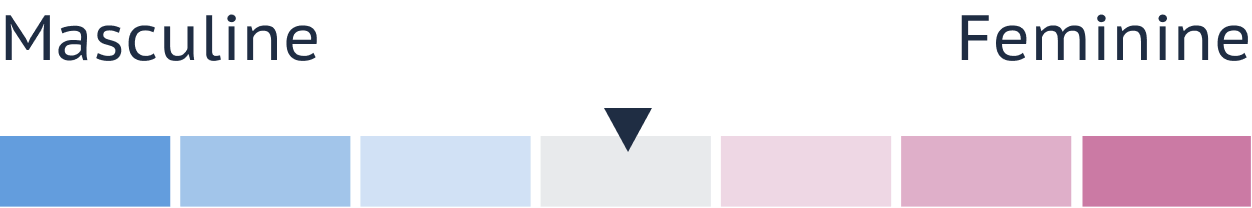

First Release: Masculine versus Feminine

Our original Inclusion Meter was binary: masculine on one end and feminine on the other. But we quickly realized that our thinking was too narrow. Gender was never a binary measurement: not all women are alike, and there are more than two genders.

Furthermore, women aren’t just one thing. In fact, none of us is just one thing. A Black woman is both Black and female. A white woman is both white and female. And a white man is both white and male. (The list goes on, obviously.)

While a white woman may experience sexism, she may not experience racism. Conversely, a Black woman may experience both sexism and racism. And a Black woman with a disability may experience sexism, racism, and ableism (individually or all at once, compounding the bias).

We realized that to truly get a handle on bias, we had to broaden our perspective quite a bit. We also realized that our gendered language meter was providing a way for people to game the system.

Our intent was to give hiring teams a way to avoid masculine-coded language and increase applications from qualified women. However, less sensitive hiring teams could also use the meter to promote masculine-coded language and decrease applications from qualified women.

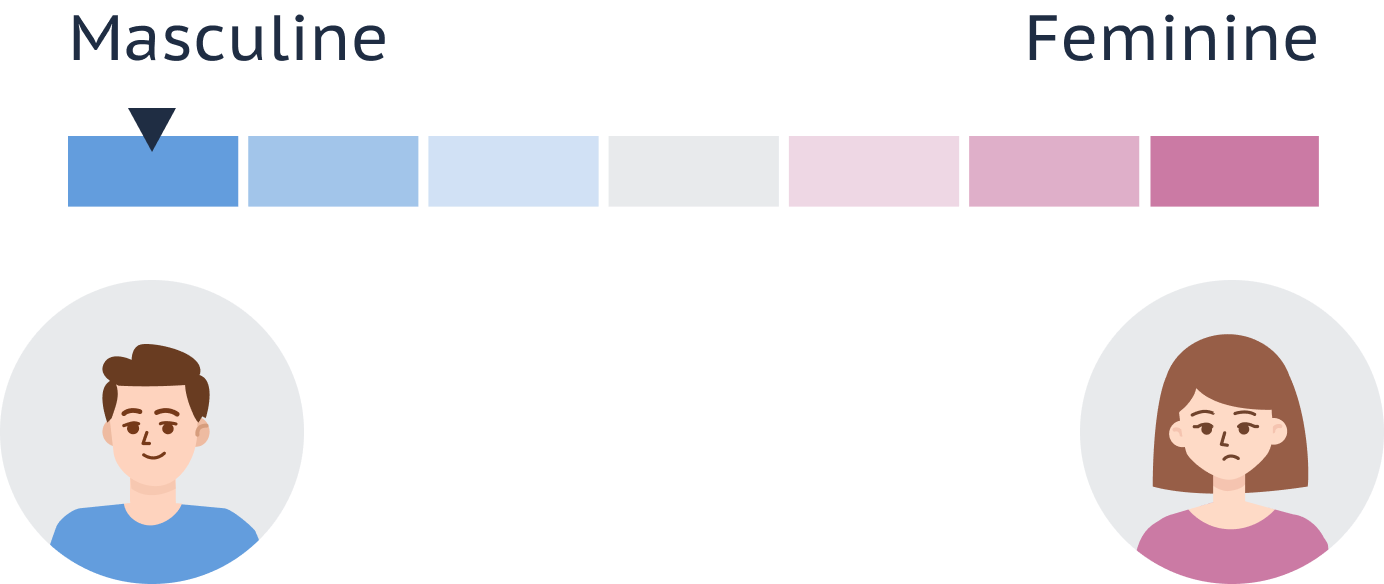

Second Release: Gendered versus Neutral

The second version of our Inclusion Meter replaced the masculine versus feminine scale with a neutral versus gendered measurement. The new version still addressed gendered language but did it without enabling hiring teams to target either women or men.

That’s an important point because, in the end, it’s about attracting a representative candidate pool, not a 50/50 split between men and women. For example, you would never expect an even split for an engineering role when the engineering talent market is mostly male. We felt the change enabled us to alert Datapeople users to gender-coded language without giving anyone a way to directly harm specific candidate groups.

However, as we mentioned earlier, gender representation is only one part of the problem. Other biases forced us to rethink our Inclusion Meter altogether. Here’s a quick rundown of some of those biases:

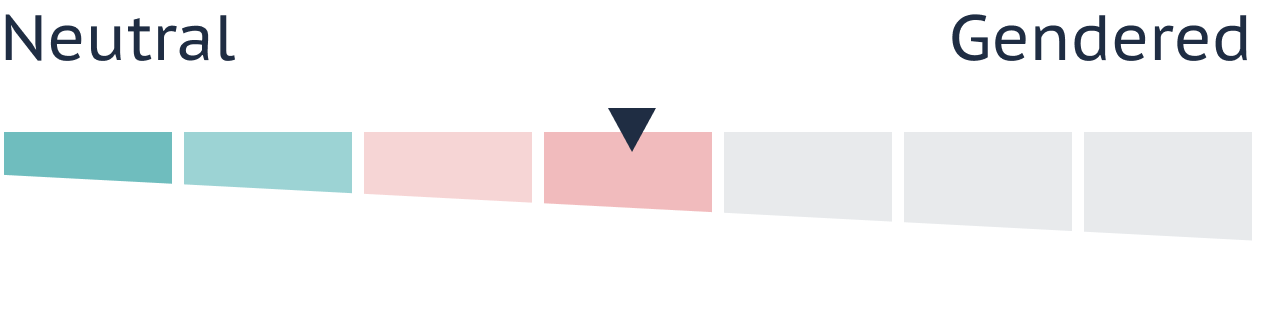

Racism

Bias against certain racial identities. According to research, hiring discrimination against Black candidates hasn’t improved at all in the last quarter-century.

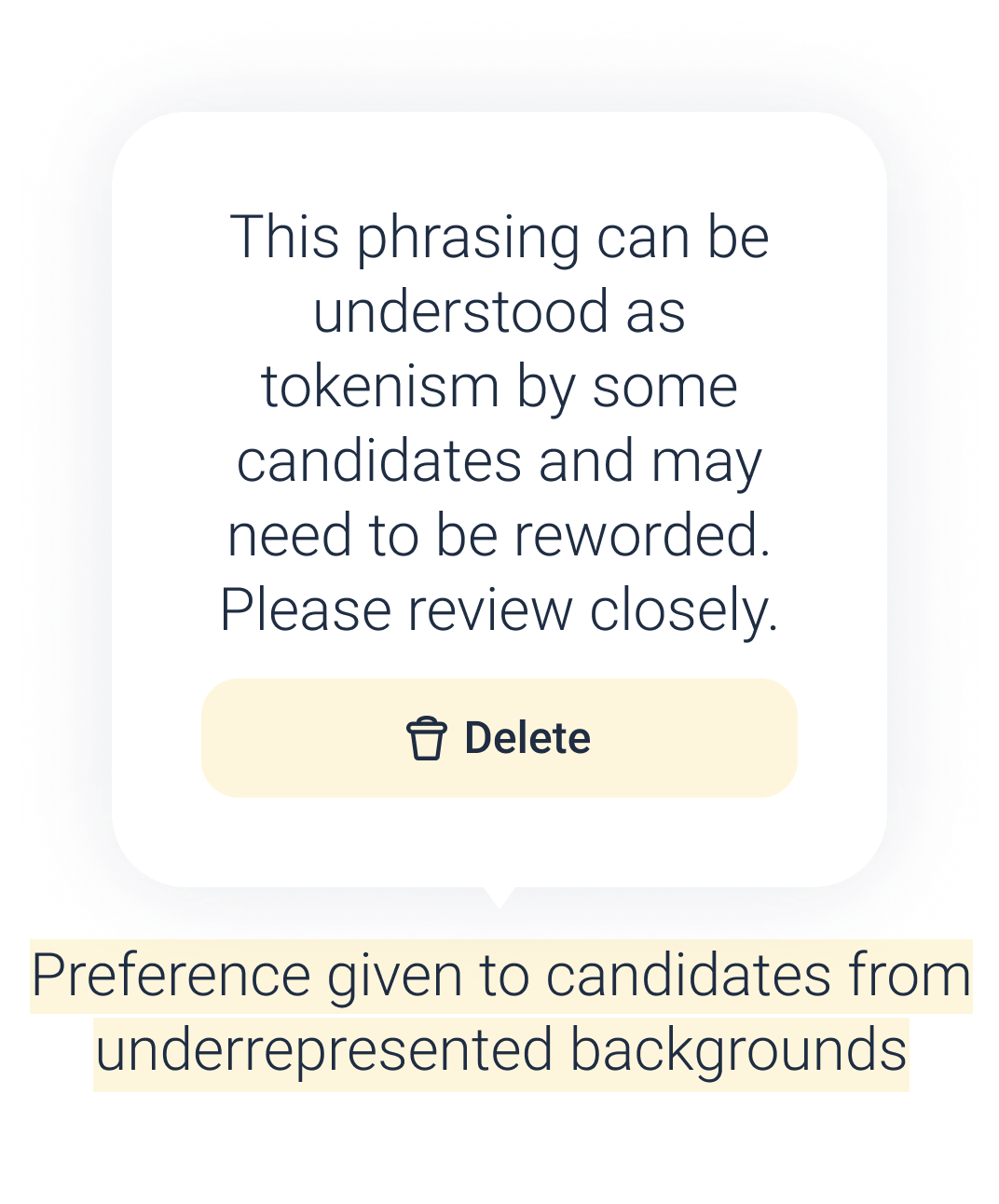

Tokenism

Bias in favor of certain underrepresented identities. It occurs when hiring teams target members of a specific underrepresented group in an attempt to alter the diversity of their candidate pool.

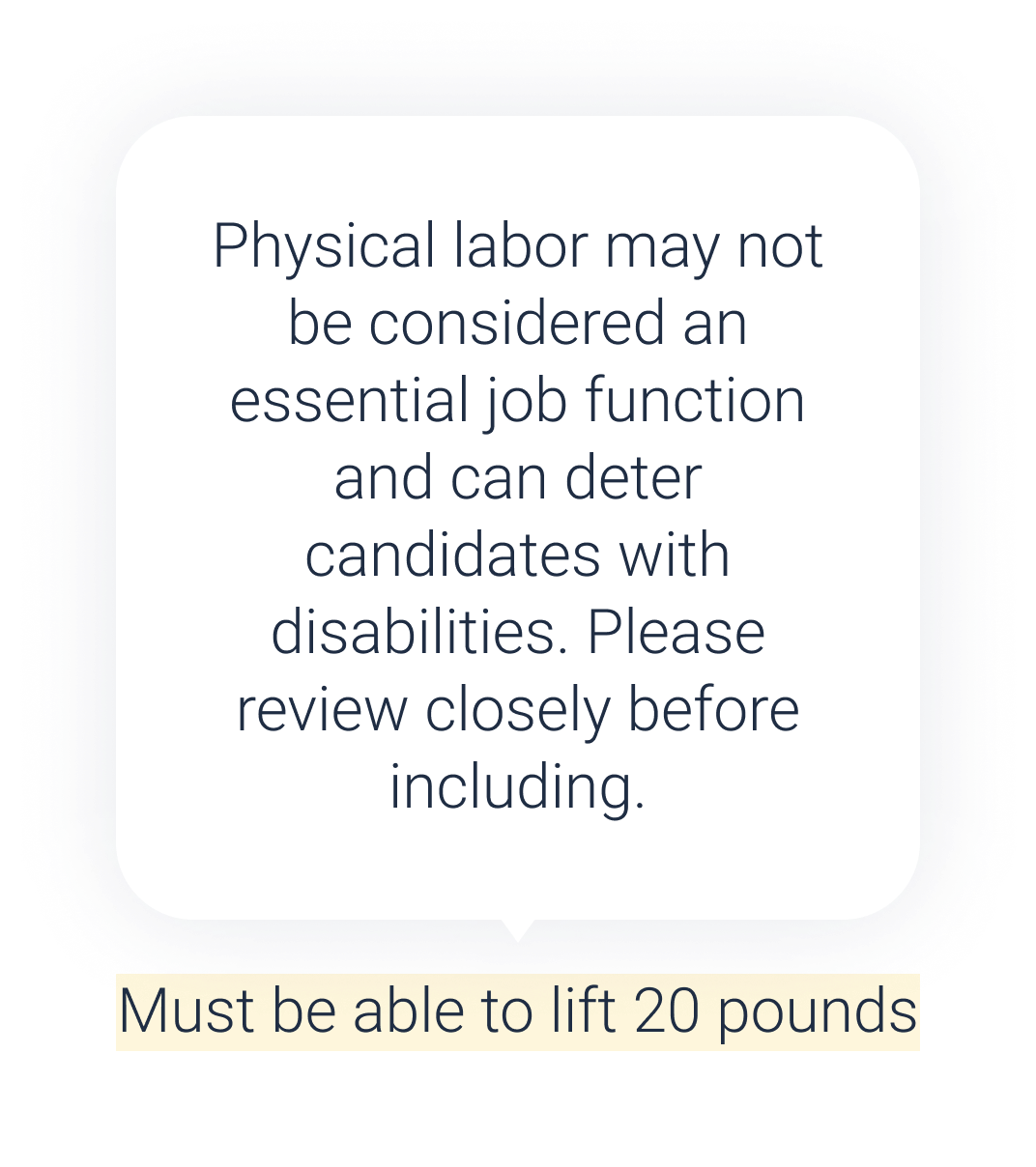

Ableism

Bias towards or against a certain level of physical ability. Hiring teams will sometimes inadvertently include requirements that aren’t necessary but end up deterring otherwise qualified candidates.

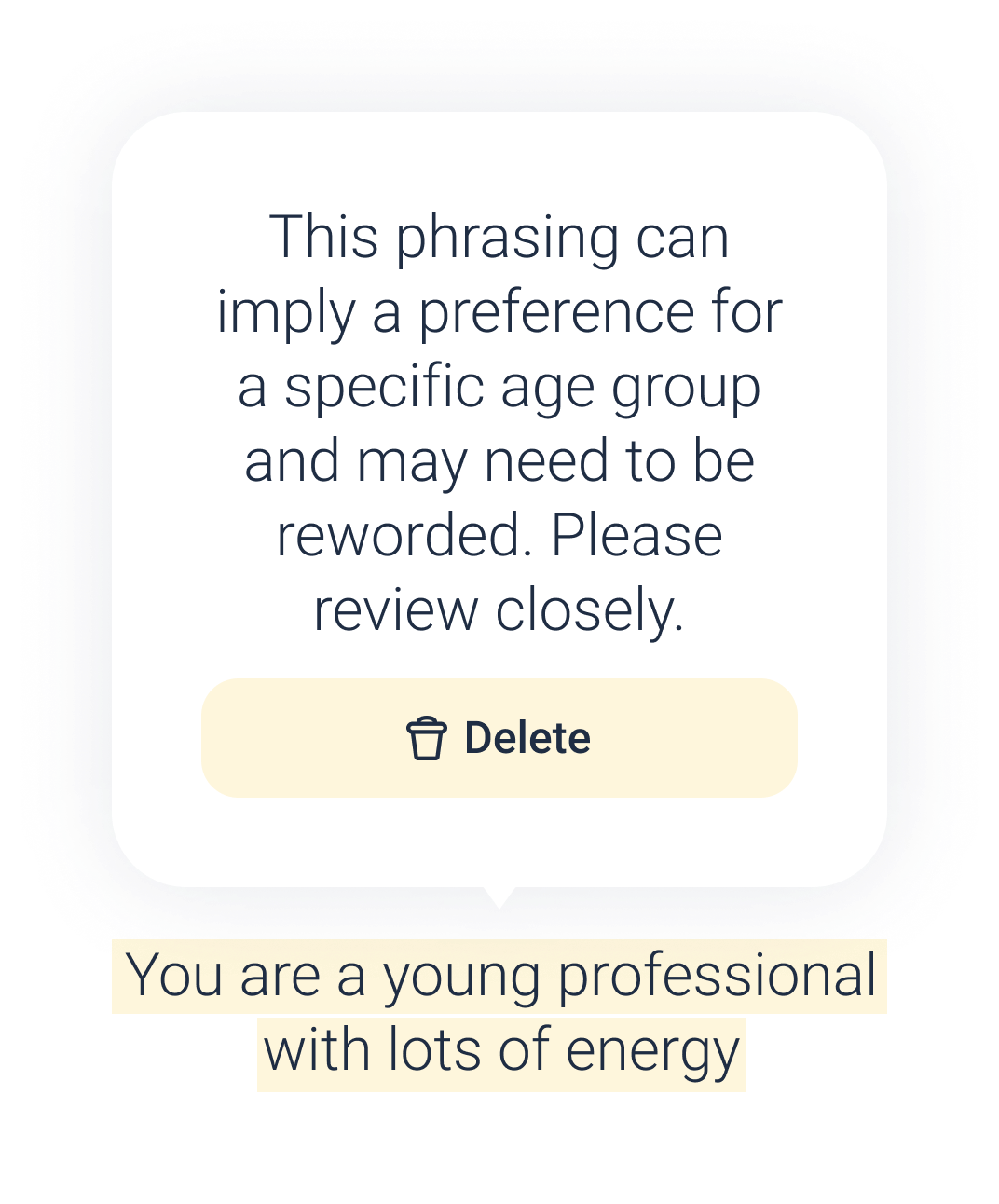

Ageism

Bias for or against a specific age group. Ageism primarily refers to bias against older workers (e.g., asking for ‘digital fluency’) but can apply to younger workers too (e.g., asking for a ‘seasoned’ professional).

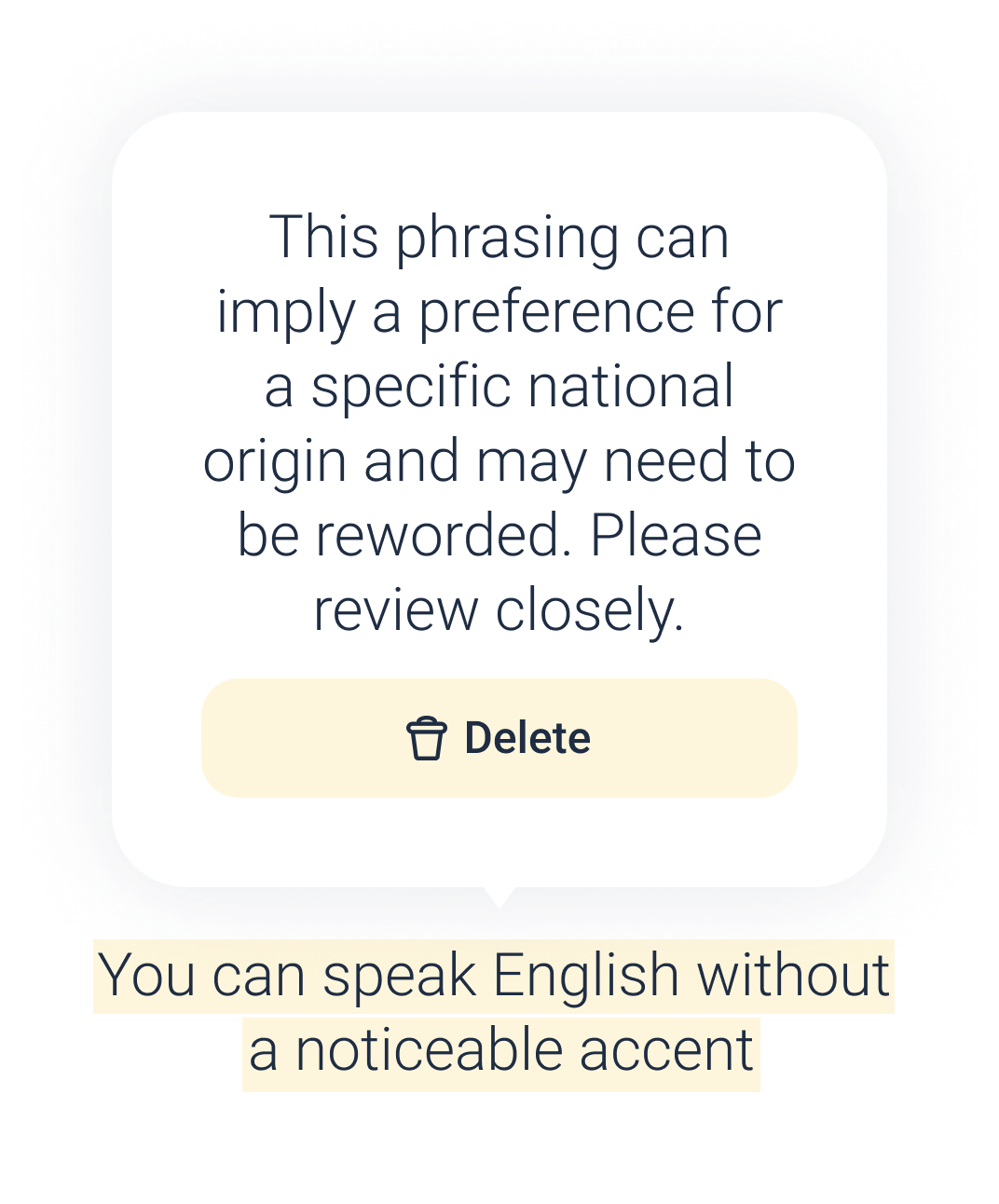

Nationalism

Bias towards or against a particular national origin. Foreign-born workers may perceive a positive bias towards natives (e.g., ‘native speaker’) and not apply.

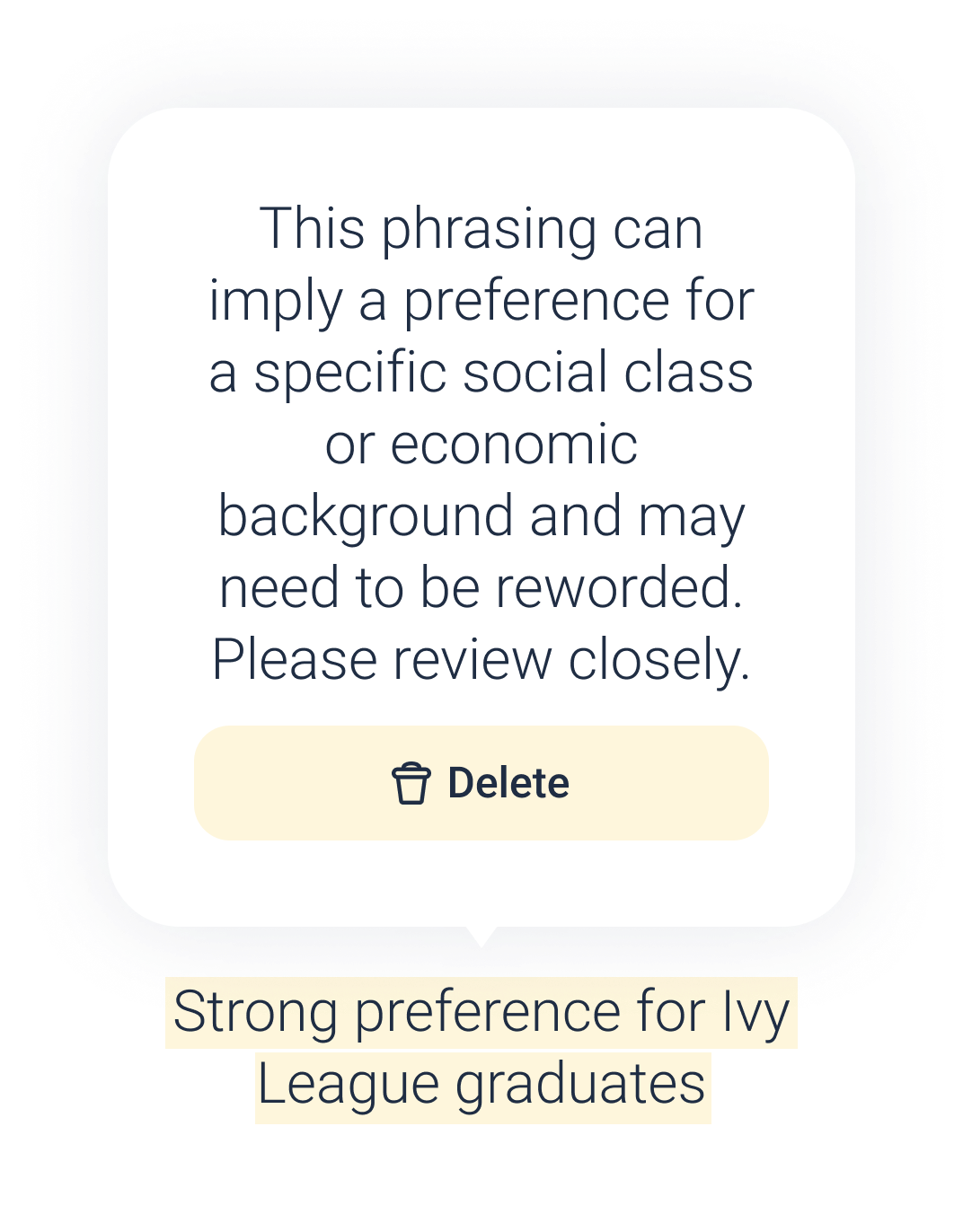

Elitism

Bias for or against a certain socioeconomic background. Job seekers from less privileged backgrounds don’t always have the same opportunities as other candidates (e.g., an Ivy League education).

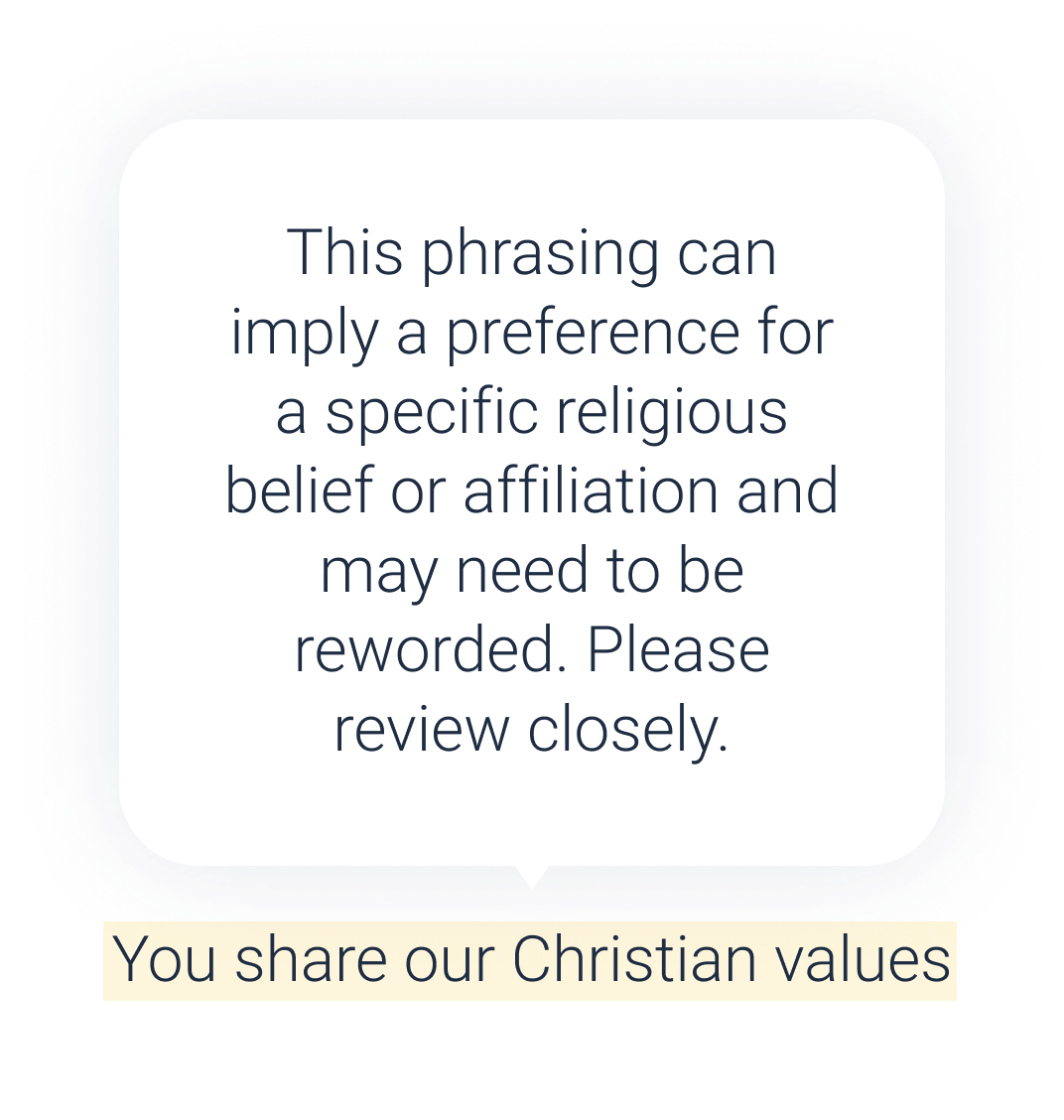

Religion

Bias towards or against a particular religion, or religion in general. Implying a preference can result in perceived bias.

Today: Inclusion versus Exclusion

We realized that, as our content and language guidance expanded to address a variety of biases, our meter needed another update.

Today, our Inclusion Meter gauges how inclusive your job description is to all candidates. On one end is inclusive; on the other is exclusive. The meter addresses not only language that can impact gender representation but also language around race, tokenism, physical ability, age, nationality, socioeconomic background, and religion. In short, it identifies language and content that may confuse or deter candidates, helping teams improve the inclusivity of their hiring processes.

Make every qualified candidate feel welcome to apply

Without question, eliminating biased language of any kind is vital (so is organic sourcing). Inadvertently including biased language in job descriptions can diminish your candidate pool. Reducing the number of candidates who apply, as any recruiter knows, lowers your chances of finding a qualified candidate.

If you’re trying to attract all qualified candidates, the language in your job descriptions has to be inclusive. Not just inclusive to women, but inclusive to everyone regardless of their age, race, religion, socioeconomic status, nationality, or physical ability. At Datapeople, we’re still addressing gendered language with our latest Inclusion Meter. It’s just that now we’re looking at seven other biases as well.

We invite you to try out our job description editor or schedule a demo to see our new Inclusion Meter in action.